Why Has Cisco Brought Back Cut-Through Ethernet Switching?

In the 1980s and 1990s, store-and-forward switches were more than adequate to handle application, host OS, and NIC requirements. Today's data centers, by contrast, often include applications that can benefit from the lower latencies of cut-through switching, as well as other applications that benefit from packet delivery latency that is consistent and independent of packet size.
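
The size dependence can be made concrete with a little arithmetic. A store-and-forward switch cannot begin transmitting until the entire frame has arrived, so its reception delay grows with frame length. A cut-through switch can begin once it has the header bytes it needs for the forwarding decision. The Python sketch below assumes a 10-Gbps port and a hypothetical 64-byte lookup threshold (`CUT_THROUGH_BYTES`). Both values are illustrative, and the model ignores the fixed pipeline delay that every switch adds.

```python
# Illustrative model of the reception delay a switch incurs before it can
# start forwarding a frame. Assumptions (hypothetical, not vendor figures):
# a 10-Gbps port, and a cut-through switch that needs the first 64 bytes.

LINK_BPS = 10_000_000_000   # hypothetical 10-Gbps link
CUT_THROUGH_BYTES = 64      # hypothetical bytes needed for the lookup


def serialization_ns(num_bytes: int, link_bps: int = LINK_BPS) -> float:
    """Nanoseconds needed to clock num_bytes on or off the wire."""
    return num_bytes * 8 * 1_000_000_000 / link_bps


def store_and_forward_wait_ns(frame_bytes: int) -> float:
    # The whole frame must be received (and its FCS checked) first.
    return serialization_ns(frame_bytes)


def cut_through_wait_ns(frame_bytes: int) -> float:
    # Forwarding starts once the header bytes used for the lookup are in.
    return serialization_ns(min(frame_bytes, CUT_THROUGH_BYTES))


if __name__ == "__main__":
    for size in (64, 512, 1500, 9000):
        print(f"{size:>5} B  store-and-forward {store_and_forward_wait_ns(size):8.1f} ns"
              f"  cut-through {cut_through_wait_ns(size):6.1f} ns")
```

At 10 Gbps, a 1500-byte frame takes 1.2 microseconds to clock in and a 9000-byte jumbo frame takes 7.2 microseconds, while the cut-through wait in this model is the same for every frame size shown.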

Cisco's successful experience with cut-through and low-latency store-and-forward switching implementations over several years, coupled with advances in ASIC flexibility and performance, has made possible cut-through switching functions that are much more sophisticated than those of the early 1990s. For example, today's cut-through switches provide better load balancing on PortChannels and can permit or deny data packets based on fields deeper inside the packet (for example, IP ACLs that match on IP addresses and TCP/UDP port numbers, which used to be difficult to implement in hardware while performing cut-through forwarding).
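
These features were hard to combine with cut-through forwarding because both depend on Layer 3 and Layer 4 fields that arrive after the Ethernet header. The switch must therefore parse further into the frame before it can decide where, or whether, to send it. The following Python sketch models the two decisions in software: a first-match IP ACL with an implicit deny at the end, and PortChannel member selection by hashing the flow's addresses and ports. The `Flow` and `AclEntry` types, the entry format, and the use of CRC-32 as the hash are hypothetical simplifications. They are not Cisco ACL syntax or the hash a Nexus ASIC actually uses.

```python
import zlib
from dataclasses import dataclass
from ipaddress import IPv4Address, IPv4Network
from typing import Optional


@dataclass(frozen=True)
class Flow:
    # Hypothetical flow record holding the L3/L4 fields the switch must parse.
    src_ip: str
    dst_ip: str
    proto: str        # "tcp" or "udp"
    src_port: int
    dst_port: int


@dataclass(frozen=True)
class AclEntry:
    # Hypothetical ACL entry format, for illustration only.
    action: str                     # "permit" or "deny"
    proto: str                      # "tcp", "udp", or "ip" (any protocol)
    src: str                        # source prefix, e.g. "10.1.0.0/16"
    dst: str                        # destination prefix
    dst_port: Optional[int] = None  # None matches any destination port

    def matches(self, f: Flow) -> bool:
        if self.proto != "ip" and self.proto != f.proto:
            return False
        if IPv4Address(f.src_ip) not in IPv4Network(self.src):
            return False
        if IPv4Address(f.dst_ip) not in IPv4Network(self.dst):
            return False
        return self.dst_port is None or self.dst_port == f.dst_port


def acl_permits(acl: list[AclEntry], f: Flow) -> bool:
    # The first matching entry decides; if nothing matches, deny
    # (modeled on the implicit deny at the end of a Cisco IP ACL).
    for entry in acl:
        if entry.matches(f):
            return entry.action == "permit"
    return False


def portchannel_member(f: Flow, num_links: int) -> int:
    # Hash the L3/L4 fields so every packet of a flow uses the same member
    # link (preserving order) while different flows spread over the bundle.
    key = f"{f.src_ip}|{f.dst_ip}|{f.proto}|{f.src_port}|{f.dst_port}".encode()
    return zlib.crc32(key) % num_links


if __name__ == "__main__":
    acl = [
        AclEntry("permit", "tcp", "10.1.0.0/16", "10.2.0.0/16", dst_port=5001),
        AclEntry("deny", "ip", "0.0.0.0/0", "0.0.0.0/0"),
    ]
    flow = Flow("10.1.3.4", "10.2.7.8", "tcp", 40000, 5001)
    print(acl_permits(acl, flow))       # True: the first entry matches
    print(portchannel_member(flow, 4))  # a member index 0-3, stable per flow
```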

In addition, Cisco switches can mitigate head-of-line (HOL) blocking by providing virtual output queue (VOQ) capabilities. With VOQ implementations, a separate queue is kept for each egress port, so packets destined to a host through an available egress port do not have to wait until an HOL packet bound for a congested port is scheduled out.
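
The effect of VOQ can be illustrated with a toy time-slot model of a single ingress port. With one shared FIFO, an HOL packet whose egress port is congested blocks every packet behind it. With one queue per egress port, the scheduler can send from any queue whose port is free. In this Python sketch, the `busy` map (how many initial slots each egress port stays congested) and the one-packet-per-slot rule are assumptions made for illustration; real VOQ schedulers arbitrate across many ingress and egress ports at once.

```python
from collections import deque


def drain_fifo(packets, busy):
    """Single shared FIFO at the ingress port; returns departure slot per packet.

    packets: list of (packet_id, egress_port) in arrival order.
    busy: hypothetical map egress_port -> slots that port stays congested.
    """
    queue = deque(packets)
    departures = {}
    slot = 0
    while queue:
        pkt_id, port = queue[0]
        if slot >= busy.get(port, 0):   # the HOL packet's port is free
            queue.popleft()
            departures[pkt_id] = slot
        # otherwise the HOL packet blocks everything queued behind it
        slot += 1
    return departures


def drain_voq(packets, busy):
    """One queue per egress port (VOQ); each slot, serve any queue whose port is free."""
    voqs = {}
    for pkt_id, port in packets:
        voqs.setdefault(port, deque()).append(pkt_id)
    departures = {}
    slot = 0
    while any(voqs.values()):
        # Simplification: the ingress port sends at most one packet per slot.
        for port, q in voqs.items():
            if q and slot >= busy.get(port, 0):
                departures[q.popleft()] = slot
                break
        slot += 1
    return departures


if __name__ == "__main__":
    pkts = [("A", 1), ("B", 2), ("C", 3)]   # A is bound for congested port 1
    print(drain_fifo(pkts, {1: 3}))
    print(drain_voq(pkts, {1: 3}))
```

With packets A, B, and C bound for ports 1, 2, and 3 and port 1 congested for the first three slots, the FIFO holds B and C behind A, so they leave in slots 4 and 5, while the VOQ version sends them in slots 0 and 1.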

These factors have allowed Cisco to introduce the Cisco Nexus 5000 Series Switches: low-latency cut-through switches with features comparable to those of store-and-forward switches.

Cut-Through Switching in Today's Data Center

As explained earlier, advancements in ASIC capabilities and performance characteristics have made it possible to reintroduce cut-through switches with more sophisticated features.

Advancements in application development, together with enhancements to operating systems and NIC capabilities, have provided the remaining pieces needed to reduce application-to-application (or task-to-task) packet transaction time to fewer than 10 microseconds. Tools such as Remote Direct Memory Access (RDMA)[4] and host OS kernel bypass[5] present legitimate opportunities in a few enterprise application environments that can take advantage of the functional and performance characteristics of cut-through Ethernet switches with latencies of about 2 or 3 microseconds.

Ethernet switches with low-latency characteristics are especially important in HPC environments.

Latency Requirements and High-Performance Computing

High-performance computing (HPC), also known as technical computing, involves clustering commodity servers to form a larger virtual machine for engineering, manufacturing, research, and data mining applications.

HPC design is devoted to the development of parallel processing algorithms and software, with programs that can be divided into smaller pieces of code and distributed across servers so that each piece can be executed simultaneously. This computing paradigm divides a task and its data into discrete subtasks and distributes these among processors.

At the core of parallel computing is message passing, which enables processes to exchange information. Data is scattered to individual processors for computation, and the partial results are then gathered to compute the final result.
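
The scatter and gather pattern can be sketched in a few lines. Here Python threads stand in for compute nodes and `queue.Queue` objects stand in for the interconnect, so each `put`/`get` pair plays the role of one message. This only illustrates the pattern under those assumptions; production HPC codes typically use an MPI library, where collective operations such as `MPI_Scatter` and `MPI_Gather` perform these steps across servers. The per-chunk work (a sum of squares) is an arbitrary choice.

```python
import queue
import threading


def worker(rank, inbox, outbox):
    # Receive this node's chunk (scatter), compute a partial result,
    # and send it back tagged with the worker's rank (gather).
    chunk = inbox.get()
    outbox.put((rank, sum(x * x for x in chunk)))


def scatter_gather_sum_of_squares(data, num_workers):
    inboxes = [queue.Queue() for _ in range(num_workers)]
    results = queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(r, inboxes[r], results))
        for r in range(num_workers)
    ]
    for t in threads:
        t.start()
    # Scatter: send each worker a strided slice of the data (one message each).
    for r in range(num_workers):
        inboxes[r].put(data[r::num_workers])
    # Gather: collect one partial result per worker, then combine them.
    partials = [results.get() for _ in range(num_workers)]
    for t in threads:
        t.join()
    return sum(p for _, p in partials)


if __name__ == "__main__":
    print(scatter_gather_sum_of_squares(list(range(10)), 3))
```

In a tightly coupled application, exchanges like these occur repeatedly, so the latency of every message, including the time spent crossing each switch, accumulates.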

Most true HPC scenarios call for application-to-application latency of around 10 microseconds. Well-designed cut-through switches, as well as a few store-and-forward Layer 2 switches, with latencies of about 3 microseconds can satisfy those requirements.
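
A simple latency budget shows how switch latency fits within the roughly 10-microsecond application-to-application target. The sketch below adds host time (sending and receiving stack, NIC, and software) to per-switch latency and optional per-link wire time. All input values are hypothetical; in particular, host overhead depends heavily on whether techniques such as kernel bypass are used.

```python
BUDGET_US = 10.0  # application-to-application target cited for most HPC scenarios


def end_to_end_us(host_us: float, switch_us: float, hops: int,
                  wire_us_per_link: float = 0.0) -> float:
    """Estimate one-way application-to-application latency in microseconds.

    host_us: combined send and receive host/NIC/software time (an assumed figure).
    switch_us: latency of each switch on the path.
    hops: number of switches traversed.
    wire_us_per_link: optional serialization/propagation time per link.
    """
    links = hops + 1  # host -> first switch ... last switch -> host
    return host_us + hops * switch_us + links * wire_us_per_link


def fits_budget(latency_us: float, budget_us: float = BUDGET_US) -> bool:
    return latency_us <= budget_us


if __name__ == "__main__":
    for hops in (1, 3):
        total = end_to_end_us(host_us=4.0, switch_us=3.0, hops=hops)
        print(hops, total, fits_budget(total))
```

For example, with a hypothetical 4 microseconds of host time and 3-microsecond switches, a path through one switch totals 7 microseconds and fits the budget, but a path through three switches totals 13 microseconds and does not.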

A few environments have applications with ultra-low end-to-end latency requirements, usually in the 2-microsecond range. For those rare scenarios, InfiniBand technology should be considered: it is already used in production networks and meets the requirements of very demanding applications.

HPC applications fall into one of three categories:

• Tightly coupled applications: These applications are characterized by significant interprocessor communication (IPC) message exchanges among the computing nodes. Some tightly coupled applications are very latency sensitive (in the 2- to 10-microsecond range).
• Loosely coupled applications: Applications in this category involve little or no IPC traffic among the computing nodes. Low latency is not a requirement.
• Parametric execution applications: These applications have no IPC traffic and are latency insensitive.

Tightly coupled applications require switches with ultra-low-latency characteristics.

Enterprises that need HPC fall into the following broad categories:

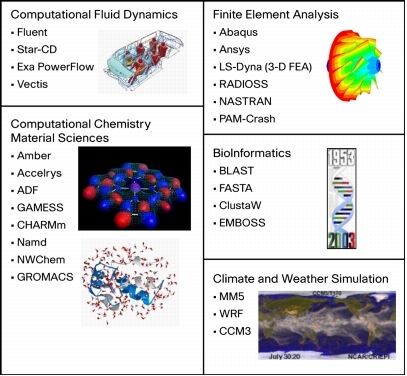

• Petroleum: Oil and gas exploration • Manufacturing: Automotive and aerospace • Biosciences • Financial: Data mining and market modeling • University and government research institutions and laboratories • Climate and weather simulation: National Oceanic and Atmospheric Administration (NOAA), Weather Channel, etc. Figure 4 shows some HPC applications that are used across a number of industries.

Figure 4. Examples of HPC Applications  |
